How to Build a RAG System with a Self-Querying Retriever in LangChain

Original post: click here

Idea

Why can’t I use natural language to query a movie based more on the vibe or the substance of a movie, rather than just a title or actor?

e.g. search: I liked “Everything Everywhere all at Once”, give me a similar film, but darker.

Action: Build Film Search

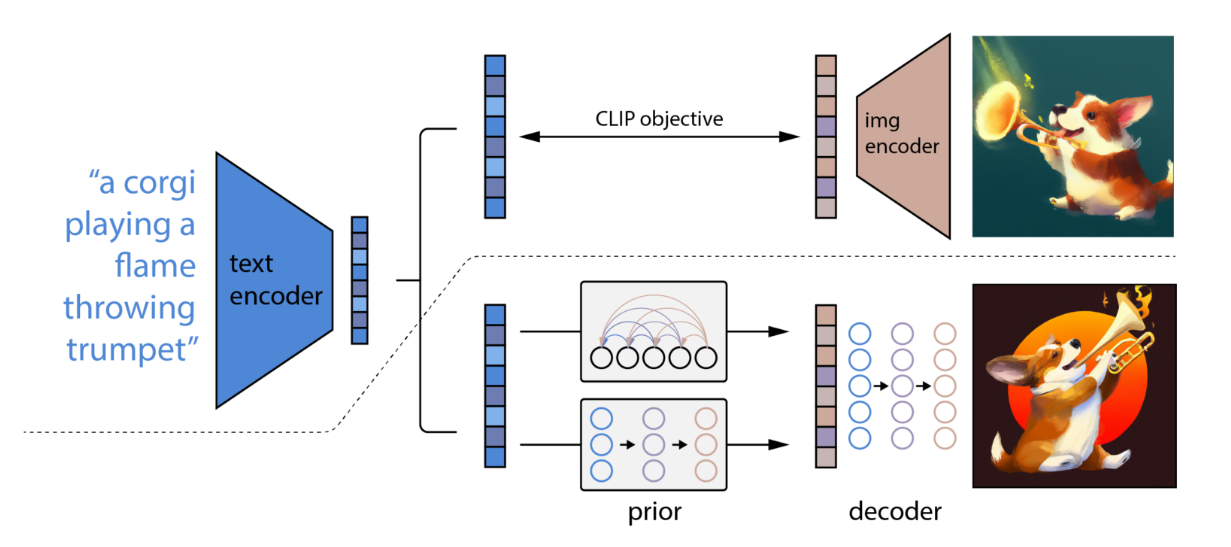

RAG-based system, takes a user’s query, embeds it, and does a similarity search to find similar films.

Different to vanilla RAG: using self-querying retriever.

Ability: allow filtering movies by their metadata, before doing a similarity search.

e.g. query: “Recommend horror movies made after 1980 that features lots of explosions”

The search will first filter out all films that are not “horror movies made after 1980”, before doing a similarity search for films that “feature lots of explosions”.

Retrieve the Data

Data: The Movie Database (TMDB)

pulled film attributes from their API.

upload documents to Pinecone.

convert each CSV file to a LangChain document, then specify which fields should be the primary content and which fields should be the metadata.

DirectoryLoader from LangChain

takes care of loading all csv files into documents.

specify:

- page-content: (overview + keywords) will be emebeded and used in similarity search during the retrieval phase.

- metadata: solely for filtering purposes before similarity search is done.

SQLRecordManager ensures that duplicate documents are NOT uploaded to Pinecone.

Embedding model: text-embedding-ada-002 OpenAI

Creating the self-querying retriever

allow to filter the movies that retrieved during RAG via metadata.

- choose vector store, make sure it supports filtering by metadata.

- what types of comparators are allowed for each vector store.

e.g. comparator “eq”, eq(‘Genre’, ‘SciFi’)

- Few-shot learning, feed the model examples of user queries and corresponding filters. LMs can use their “reasoning” abilities and world knowledge to generalize from these few-shot examples to other user queries.

- model has to know a description of each metadata fields.

Creating the chat model

build self-querying retriever -> build standard RAG model

Define chat model (summary model):

takes in a context (retrieved films + system message)

respond with a summary of each recommendation

keep low cost: GPT-3.5 Turbo, keep best result: GPT-4 Turbo

Issue:

presented with no film data in its context, the model would use its own(faulty) memory to try and recommend some films. Not good.

Limitations of this film search

No saving recommendation histories.

Need to update raw data weekly.

Bad metadata filtering by the self-querying retrieval.

e.g. “Ben A. films” could mean “Ben A. is the star” or “Ben A. directed”

Clarification of the query would be helpful

Possible improvements:

Re-ranking of documents after retrieval.

Have a chat model that you can converse with in multi-turn conversations rather than just a QA bot.