2024

SUM

Research Project:

Multilingual Evidence Against Hallucinations

Mentor: Professor Philipp Koehn (Johns Hopkins University).

Bullets:

- Developed a Retrieval-Augmented Generation (RAG) system that retrieves data across languages, aiming to reduce hallucinations in LLM responses.

- Constructed a multilingual dataset (around 15k) from MegaWika and encoded it with LASER, Meta's multilingual sentence encoder, to support retrieval in the RAG system.

- (WIP) Evaluating the data quality of MegaWika using the xSIM tool included in LASER.
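A minimal sketch of the two ideas in the bullets above: dense cross-lingual retrieval (find the passages whose embeddings are closest to the query) and an xSIM-style check of parallel data (for each source sentence, is its nearest target the aligned translation?). It assumes the sentence embeddings already exist (in the real system they would come from LASER; the encoding step is not shown), and the toy 3-dimensional vectors and function names are hypothetical. It uses plain cosine similarity; the actual xSIM tool may use margin-based scoring instead.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query: Vector, passages: List[Vector], k: int = 1) -> List[int]:
    """Return the indices of the k passages most similar to the query, best first."""
    ranked = sorted(
        range(len(passages)),
        key=lambda i: cosine(query, passages[i]),
        reverse=True,
    )
    return ranked[:k]


def xsim_style_error_rate(src: List[Vector], tgt: List[Vector]) -> float:
    """Fraction of source sentences whose nearest target is NOT the aligned one.

    Assumes src[i] and tgt[i] embed a translation pair. Simplified: raw cosine
    nearest neighbour, no margin scoring. A high rate hints at misaligned or noisy pairs.
    """
    errors = sum(1 for i, s in enumerate(src) if retrieve(s, tgt, k=1)[0] != i)
    return errors / len(src)


# Hypothetical toy embeddings: three "English" sentences and their "translations".
src = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
tgt = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.2, 0.8]]
# The same targets with the first two pairs swapped, simulating misaligned data.
tgt_bad = [[0.1, 0.9, 0.0], [0.9, 0.1, 0.0], [0.0, 0.2, 0.8]]

print(retrieve([1.0, 0.0, 0.0], tgt, k=2))
print(xsim_style_error_rate(src, tgt))
print(xsim_style_error_rate(src, tgt_bad))
```

With well-aligned pairs the error rate is 0.0; swapping two pairs pushes it to 2/3, which is the kind of signal a data-quality check on MegaWika would look for.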

What I did:

- Conducted summer research on multilingual NLP and RAG.

- Prepared the capstone proposal (due early August).

- Explored future research directions.

What I thought:

Some draft ideas for my future research direction:

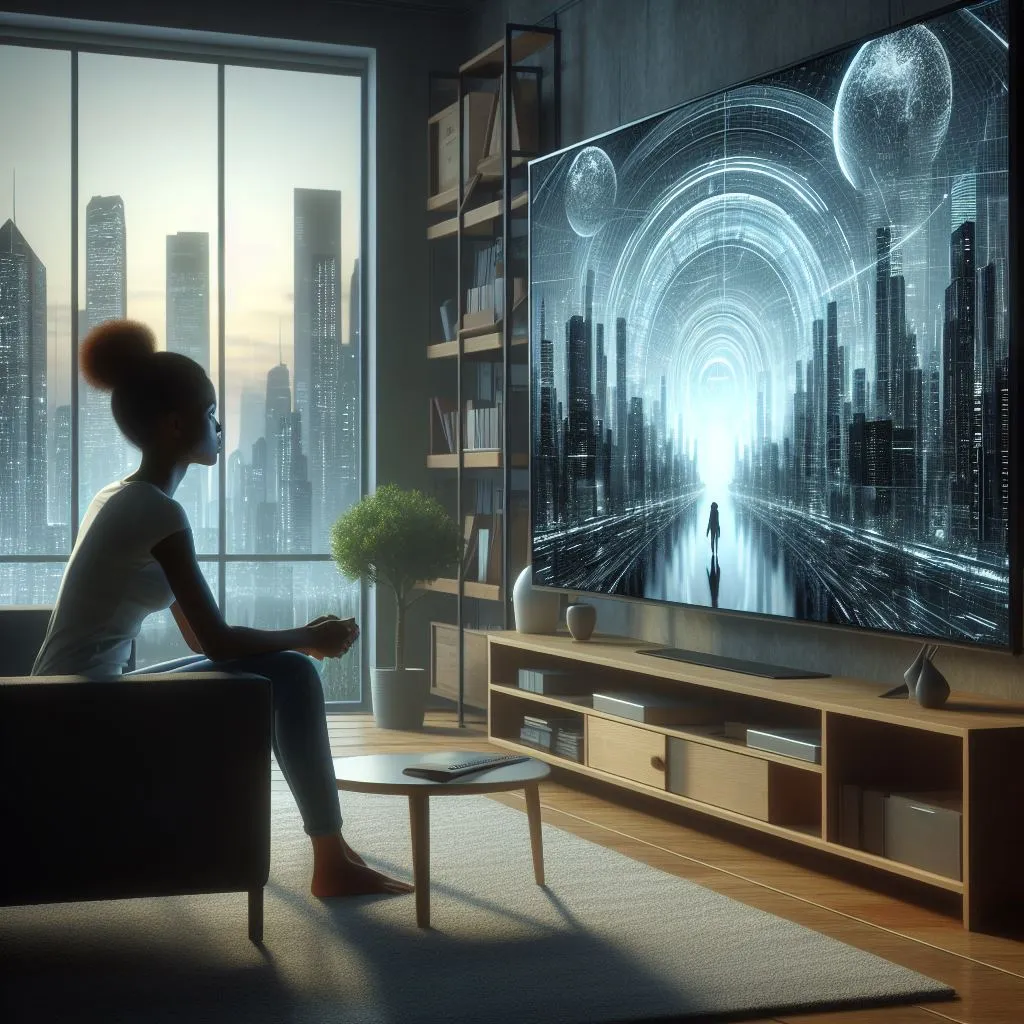

Safety and responsible AI

I want to make sure that the outputs of AI models and LLMs are evidence-based. For example, when designing game characters or scenes, the generated images should only use elements supported by evidence: if I design a character who is a British gentleman, he should not wear a traditional Japanese costume.

This applies not only to language models but also to video, audio, and images.

Multilingual Tasks

Text, audio, or images.

- For text: RAG is available.

- For audio: multilingual speech recognition is also worth considering.

My research partner found that when playing Honor of Kings (王者荣耀国际服, the international server), players from around the world share the same server and may speak different languages. However, the speech-to-text feature may fail to recognize which language is being spoken.

Overall: think bigger and more diverse

Multimodal: text-to-image, audio-to-text, and so on.

Multilingual.

In these settings, will LLMs and AI models still be effective?

Lifelong ML

Models can be updated by feeding in newer data over time.

What I read:

I plan to collect all readings in Notion.